About the article

DOI: https://www.doi.org/10.15219/em54.1098

The article is in the printed version on pages 75-85.


How to cite

K. Swan, S. Day, L. Bogle, T. van Prooyen, AMP: a tool for characterizing the pedagogical approaches of MOOCs, „e-mentor” 2014, nr 2 (54), s. 75-85, http://www.e-mentor.edu.pl/artykul/index/numer/54/id/1098.

E-mentor number 2 (54) / 2014

Table of contents

- Introduction

- Context

- The AMP Tool

- Methodology

- MOOC Reviews

- Discussion

- References

- Appendix A

- Appendix B

- Appendix C

About the authors

Footnotes

1 A. Sfard, On two metaphors for learning and the dangers of choosing just one, „Educational Researcher” 1998, Vol. 27, No. 4, pp. 4-13.

2 M. Bousquet, Good MOOCs, bad MOOCs, „Chronicle of Higher Education”, 25.07.2012, http://chronicle.com/blogs/brainstorm/good-moocsbad-moocs/50361.

3 J.K. Waters, What do massive open online courses mean for higher ed?, „Campus Technology” 2013, Vol. 26, No. 12, http://camputechnology.com/Home.aspx.

4 T.L. Friedman, Revolution hits the universities, „The New York Times”, 26.01.2013, http://www.nytimes.com/2013/01/27/opinion/sunday/friedman-revolution-hits-the-universities.html?_r=0.

5 M.Y. Vardi, Will MOOCs destroy academia?, „Communications of the ACM” 2012, Vol. 55, No. 11, p. 5.

6 D. Glance, M. Forsey, M. Riley, The pedagogical foundations of massive open online courses, „First Monday”, May 2013, http://firstmonday.org/ojs/index.php/fm/article/view/4350/3673.

7 P. Norvig, The 100,000-student classroom, 2012, http://www.ted.com/talks/peter_norvig_the_100_000_student_classroom.html.

8 S. Orn, Napster, Udacity, and the academy Clay Shirky, 2012, http://www.kennykellogg.com/2012/11/napster-udacity-and-academy-clayshirky.html.

9 J. Lu, N. Law, Online peer assessment: Effects of cognitive and affective feedback, „Instructional Science” 2012, Vol. 40, No. 2, pp. 257-275; R.J. Stiggins, Assessment crisis: The absence of assessment for learning, „Phi Delta Kappan” 2002, Vol. 83, No. 10, pp. 758-765; J.W. Strijbos, S. Narciss, K. Dünnebier, Peer feedback content and sender's competence level in academic writing revision tasks: Are they critical for feedback perceptions and efficiency?, „Learning and Instruction” 2010, Vol. 20, No. 4, pp. 291-303.

10 A. Darabi, M. Arrastia, D. Nelson, T. Cornille, X. Liang, Cognitive presence in asynchronous online learning: A comparison of four discussion strategies, „Journal of Computer Assisted Learning” 2011, Vol. 27, No. 3, pp. 216-227; Q. Li, Knowledge building community: Keys for using online forums, „TechTrends” 2004, Vol. 48, No. 4, pp. 24-29; B. Walker, Bridging the distance: How social interaction, presence, social presence, and sense of community influence student learning experiences in an online virtual environment, Ph.D. dissertation, University of North Carolina, 2007, http://libres.uncg.edu/ir/uncg/f/umi-uncg-1472.pdf.

11 L. Yuan, S. Powell, MOOCs and Open Education: Implications for Higher Education, Centre for Educational Technology & Interoperability Standards, 2013, http://publications.cetis.ac.uk/2013/667, p. 11.

12 T.C. Reeves, J.G. Hedberg, MOOCs: Let's get REAL, „Educational Technology” 2014, Vol. 54, No. 1, pp. 3-8.

13 A.J. Romiszowski, What's really new about MOOCs?, „Educational Technology” 2013, Vol. 53, No. 4, pp. 48-51.

14 T. Reeves, Evaluating what really matters in computer-based education, 1996, http://www.eduworks.com/Documents/Workshops/EdMedia1998/docs/reeves.html#ref10, p. 1.

15 J.G. Holland, B.F. Skinner, The analysis of behavior: A program for self-instruction, McGraw-Hill, New York 1961.

16 A. Sfard, op.cit.

17 J.G. Holland, B.F. Skinner, op.cit.

18 K. Swan, M. Mitrani, The changing nature of teaching and learning in computer-based classrooms, „Journal of Research on Computing in Education” 1993, Vol. 26, No. 1, pp. 40-54.

19 T. Levin, After setbacks, online courses are rethought, „The New York Times”, 10.12.2013, http://www.nytimes.com/2013/12/11/us/after-setbacks-online-courses-are-rethought.html.

AMP: A tool for characterizing the pedagogical approaches of MOOCs

Karen Swan, Scott Day, Leonard Bogle, Traci van Prooyen

Introduction

This article reports on the development and validation of a tool for characterizing the pedagogical approaches taken in MOOCs (Massive Open Online Courses). The Assessing MOOC Pedagogies (AMP) tool characterizes MOOC pedagogical approaches on ten dimensions. Preliminary testing on 17 different MOOCs demonstrated >80% inter-rater reliability and showed that the measure can distinguish differing pedagogical patterns. The patterns distinguished cut across content areas and seemed to be related to what Sfard1 termed metaphors for learning: acquisition vs. participation.

Since 2008, when George Siemens and Stephen Downes of Canada pioneered the first Massive Open Online Course (MOOC)2, an explosion of course offerings has emerged in the United States, engendering a great deal of debate3. MOOCs have come to be viewed by some as the savior of higher education4, and by others as the harbinger of its ultimate demise5.

Empirical evidence on the effectiveness of MOOC pedagogy is hard to find. However, some of the pedagogical strategies used in MOOCs have been consciously adapted from other contexts6. These commonly used strategies include: lectures formatted into short videos7; videos combined with short quizzes8; automated assessments; peer/self-assessments9; and online discussions10. In addition, „cMOOC's provide great opportunities for non-traditional forms of teaching approaches and learner-centered pedagogy where students learn from one another”11.

Because the mainstream media seems to have mistaken MOOCs for online learning in general, and because not even all MOOCs are the same, it is important to distinguish among them. We believe that this should be the first step in the „research, evaluation, and assessment of learning” in MOOCs for which Reeves and Hedberg argue12. Even though it may be, as Romiszowski13 notes, that most MOOCs have not been designed to take advantage of the affordances of sophisticated instructional designs or advances in learning technologies, we agree with Reeves and Hedberg that we should begin by investigating their designs for learning.

In this paper we describe the development of an instrument that characterizes the pedagogical approaches taken by individual MOOCs along ten dimensions. We hope that the instrument, which we call AMP (Assessing MOOC Pedagogies), will allow us to categorize MOOCs by their learning designs. Much has been written about MOOCs, pro and con, but little has been done to empirically review the pedagogical approaches actually taken by specific MOOCs. It should be noted that our goal is to characterize, not evaluate, MOOC pedagogies. Once a tool for describing these pedagogies has been designed and tested, empirical evidence can, we hope, distinguish between more and less effective pedagogies.

Context

The development of the AMP tool began with work done by the American Council on Education's College Credit Recommendation Service (ACE CREDIT) to review MOOCs for college credit. The project was funded by the Gates Foundation, and in 2013 ACE CREDIT approved 13 MOOCs for college credit. These included: College Algebra, BioElectricity, Genetics, Pre-Calculus, and Single Variable Calculus from Coursera; Introduction to Artificial Intelligence, Introduction to Computer Science, Introduction to Physics, Introduction to Statistics, Introduction to Parallel Programming, 3-D Modeling, and HTML 5 Game Development from Udacity; and Circuits and Electronics from EdX. In general, ACE CREDIT has created exams to test content learning for each of the MOOCs it has approved. ACE CREDIT exams can be taken for $150.00, which considerably reduces the cost of college credit.

While ACE reviewed the MOOCs for content coverage, it subcontracted with the UIS team to develop a tool to categorize the pedagogical approaches taken by the same MOOCs. The research reported in this article deals with the development and validation of that tool and with preliminary findings on its applicability to reviewing the original 13 ACE-approved MOOCs, as well as four non-STEM Coursera MOOCs chosen for convenience and comparison.

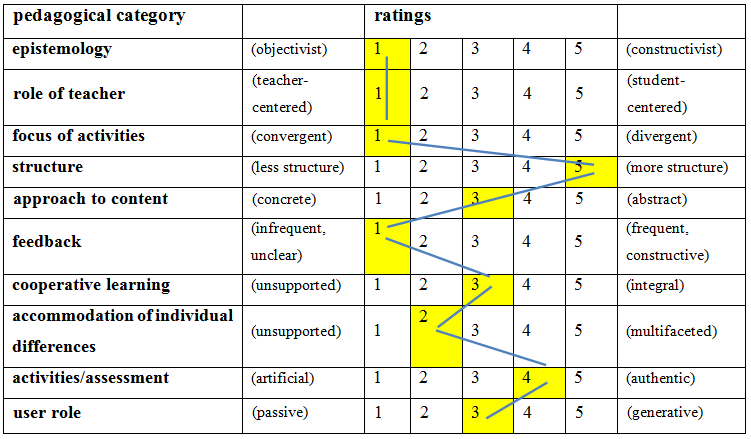

The AMP Tool

The AMP (Assessing MOOC Pedagogies) instrument focuses on characterizing the pedagogies employed in MOOCs. It is based on a similar tool developed by Thomas Reeves for describing the pedagogical dimensions of computer-based instruction (CBI). Reeves wrote, „Pedagogical dimensions are concerned with those aspects of design and implementation [...] that directly affect learning”14. His original CBI tool accordingly included 14 dimensions focused on aspects of design and implementation that had been shown to directly affect learning. Reviewers were asked to place a particular CBI application on a one-to-ten scale for each dimension.

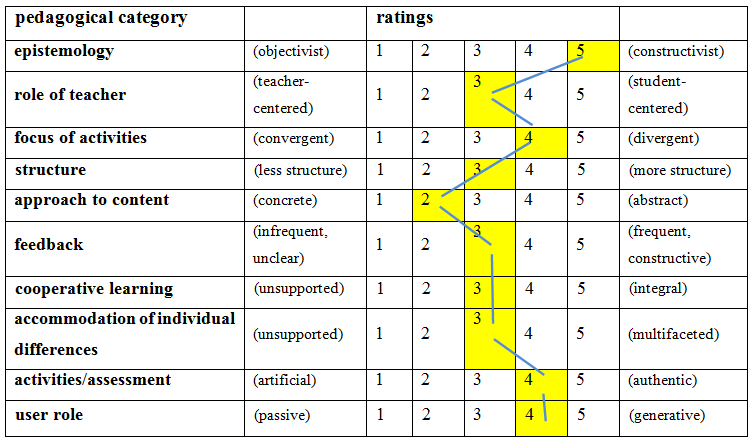

In adapting Reeves's tool, the UIS team retained six of the dimensions - epistemology, role of the teacher, experiential validity (renamed „focus of activities”), cooperative learning, accommodation of individual differences, and user role - albeit adapting these to the MOOC context. They also added four dimensions that seemed important: structure, approach to content, feedback, and activities/assessment. The scale for each dimension was also reduced from ten points to five after the shorter scale was found to yield much better inter-rater reliability. Indeed, we revised the AMP tool iteratively, testing whether it produced consistent reviews. Besides changing the scale, we also developed specific criteria for many of the dimensions. These dimensions are described below:

Epistemology (1=objectivist/5=constructivist)

Objectivists believe that knowledge exists separately from knowing, while constructivists believe that knowledge is „constructed” in the minds of individuals. This leads to differences in pedagogical approaches: objectivist (or instructionist) approaches focus on instruction, instructional materials, and absolute goals, whereas constructivist approaches focus on learning and on integrating learners' goals, experiences, and abilities into their learning experiences. Crudely caricatured, instructionists see minds as vessels to be filled with „instruction”, so what matters is that the instruction is very carefully designed and sequenced; evidence for an objectivist approach therefore includes a focus on instruction, instructional materials, and absolute goals, with very carefully designed and sequenced instruction. Constructivists, on the other hand, focus on the design of rich „learning environments” that support discovery learning; evidence for a constructivist approach includes a focus on learning and the integration of learners' goals, experiences, and abilities into their learning experiences.

Role of the teacher (1=teacher centered/5=student centered)

Teacher-centered teaching and learning is what it sounds like: focused on what the teacher (or the teaching materials) does, whereas student-centered teaching and learning is focused on what the students do. Directions for this dimension give four criteria for teacher-centeredness (one size fits all; deadlines are set and firm; automated grading with little or no human response; one-way communication from the instructor) and suggest the following ratings: 1 = all criteria met; 2 = 3/4 criteria met; 3 = 2/4 criteria met; 4 = 1/4 criteria met; 5 = no criteria met. Other indicators of student-centeredness (5) include: choice in ways of demonstrating acquisition of knowledge; self-paced, generative assessments; and robust discussion boards that are responded to or graded (that is, valued).
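The criterion-counting rule for this dimension can be expressed as a small function. This is a minimal sketch, not part of the published AMP instrument: the function name `role_of_teacher_rating` and the string labels for the criteria are hypothetical, and the sketch covers only the four-criterion count, not the other indicators of student-centeredness, which remain a matter of reviewer judgment. Note that the scale is inverted relative to most other dimensions.

```python
# Hypothetical labels for the four teacher-centeredness criteria described above.
TEACHER_CENTERED_CRITERIA = frozenset({
    "one size fits all",
    "deadlines are set and firm",
    "automated grading with little or no human response",
    "one-way communication from instructor",
})


def role_of_teacher_rating(criteria_met: set) -> int:
    """Return the 1-5 'role of the teacher' rating.

    The scale is inverted: meeting all four teacher-centered criteria
    gives 1 (teacher centered) and meeting none gives 5 (student centered).
    """
    met = set(criteria_met)
    unknown = met - TEACHER_CENTERED_CRITERIA
    if unknown:
        raise ValueError(f"unrecognized criteria: {sorted(unknown)}")
    return 5 - len(met)


# Example: a course with firm deadlines and automated grading meets 2 of 4 criteria.
rating = role_of_teacher_rating({
    "deadlines are set and firm",
    "automated grading with little or no human response",
})
print(rating)  # 3
```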

Focus of activities (1=convergent/5=divergent)

Convergent learning is learning that „converges” on a single correct answer. Many activities in the STEM disciplines are of this sort. In contrast, many activities in the humanities emphasize divergent learning, in which learners explore, and defend, what Judith Langer called a „horizon of possibilities”. The focus of activities is rated convergent (1) if all answers are either right or wrong and there are no alternatives, and 2 if there is more than one path to a single right answer. It is rated divergent (5) if most questions can be answered in multiple ways, and 4 if a majority of questions suggest multiple correct answers. A rating of 3 indicates a balance between convergent and divergent activities.

Structure (1=less structured/5=more structured)

The structure dimension describes the level and clarity of structure in the MOOC. Four criteria indicate more structure: clear directions, transparent navigation, consistent organization of the units, and consistent organization of the presentation of material from unit to unit. A less structured MOOC (1) would exhibit none of these characteristics; a MOOC would be rated 2 if it exhibits one of the four characteristics, 3 for two, 4 for three, and very structured (5) if all four characteristics are found.
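Structure, like feedback, cooperative learning, and accommodation of individual differences later in this list, is scored by counting how many of four criteria are met and adding one. A minimal sketch of that rule in Python follows; the function name `count_based_rating` and the criterion labels are hypothetical shorthand for the criteria in the text, not part of the AMP instrument itself.

```python
from collections.abc import Iterable

# Hypothetical labels for the four structure criteria described above.
STRUCTURE_CRITERIA = frozenset({
    "clear directions",
    "transparent navigation",
    "consistent organization of units",
    "consistent presentation from unit to unit",
})


def count_based_rating(criteria_met: Iterable[str], criteria: frozenset) -> int:
    """Map 0-4 criteria met to a 1-5 rating (rating = 1 + number met)."""
    if len(criteria) != 4:
        raise ValueError("the count-based AMP dimensions use exactly four criteria")
    met = set(criteria_met)
    unknown = met - criteria
    if unknown:
        raise ValueError(f"unrecognized criteria: {sorted(unknown)}")
    return 1 + len(met)


print(count_based_rating(STRUCTURE_CRITERIA, STRUCTURE_CRITERIA))  # 5
print(count_based_rating({"clear directions"}, STRUCTURE_CRITERIA))  # 2
```

Passing a different four-item criteria set (for example, the feedback criteria: immediate, clear, constructive, personal) reuses the same rule. Role of the teacher is the exception, since its scale runs the other way.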

Approach to content (1=concrete/5=abstract)

The ratings for this pedagogical dimension are not intended to reflect whether the subject matter is abstract or concrete; rather, they examine whether the material is presented in an abstract or concrete way. Concrete presentations (1) would include examples of how the subject relates to or is used in the real world or in everyday life, together with corresponding activities or assessments that ask students to apply general concepts to specific situations. Abstract presentations (5) seem to assume that the material is self-explanatory (e.g., a mathematical formula is presented on the assumption that its mere presentation will let students understand the values, rather than being supported with examples that show how it relates to the real world). Presentations that fall in the middle (3) might include those in which concrete analogies are used to make abstract ideas more understandable.

Feedback (1=infrequent, unclear/5=frequent, constructive)

In online learning, feedback from the instructor and/or peers takes on an expanded role because it represents a major area of communication and interaction around course concepts. Feedback can, however, come from the program itself as well as from instructors and peers. This dimension also concerns the usefulness of the feedback provided, and four criteria are used to judge this: whether the feedback is immediate, clear (gives the right answer but no explanation), constructive (gives an explanation), and personal (directly addresses what the student did). The MOOC is rated 5 on this dimension if all four criteria are present, 4 if three are identified, 3 if two are found, 2 if only one is present, and 1 if none is in evidence.

Cooperative learning (1=unsupported/5=integral)

This dimension examines the extent of cooperative learning in the MOOC. The criteria for this dimension include: meetups/discussion boards are encouraged, cooperative learning is employed as a teaching strategy, assessment of collaborative work is evident and/or valued, group activities are a main part of the course. If all four criteria are met, 5 points are given. If none of the criteria are met the rating would be a 1. If 1 criterion is met, a 2 is assigned, if 2 criteria are met a 3 is assigned, and so on.

Accommodation of individual differences (1=unsupported/5=multifaceted)

Although it might be assumed that MOOCs would accommodate individual differences among learners, this is not always the case. Some MOOCs make very little, if any, provision for individual differences, whereas others are designed to accommodate a wide range of them, including personalistic, affective, and physiological factors (Ackerman, Sternberg, and Glaser, 1989). A rating of multifaceted (5) on this dimension indicates that all four of the following criteria are met: self-directed learning, verbal and written presentations by the instructor, opportunities for students to present answers to the material in a variety of ways, and universal design. If none of the criteria are met, the rating is 1; if one criterion is met, the rating is 2, and so on.

Activities/assessment (1=artificial/5=authentic)

Brown, Collins, and Duguid (1989) argued that knowledge, and hence learning, is situated in the context in which it is developed, and that instructional activities and assessments should therefore be situated in real-world activities and problems. They called such activities and assessments „authentic” and contrasted them with typical school activities, which they deemed artificial because they are contrived. Evidence of an artificial approach (1) includes activities and assessments that ask for declarative knowledge, formulas, rules, or definitions. Evidence of an authentic approach (5) includes presentations with authentic examples that the instructor works through for the students, and activities and assessments that regularly involve real-world problems. MOOCs in which instructor presentations include real-world examples that the instructor works through for students and/or some activities and assessments involve real-world problems would be rated 3.

User role (1=passive/5=generative)

Hannafin (1992) identified what he saw as an important distinction between learning environments. He maintained that some learning environments are primarily intended to enable learners to „access various representations of content”. He labeled these „mathemagenic” environments, but we call them passive because that term seems more understandable. Other learning environments, called „generative” by Hannafin, engage learners in the process of creating, elaborating, or representing knowledge themselves. On this dimension the user role is deemed passive (1) when the learner's role is primarily to access instructor presentations and other course materials, and generative (5) when the learner's role is primarily to generate content. In the context of MOOCs, support for meetups and other study groups is seen as placing the MOOC in the middle of this dimension (3).

The AMP tool includes fields for identifying the MOOC title, instructor(s), platform/university offering the course, subject area, level/prerequisites, length, and time required. Reviewers are also asked to provide a general description of the MOOC and to describe its use of media and the types of assessment used in it. Examples of completed MOOC reviews are provided in Appendices A, B, and C.

Methodology

After initial revisions of the AMP instrument (which included reducing the scales from 10 to 5 points and adding criteria to some dimensions to make ratings easier to distinguish), four reviewers independently reviewed the first thirteen MOOCs they were given and then met to see whether they could reach consensus on their ratings. Initial inter-rater reliability across measures was >80% on all MOOCs, and agreement rose to 100% through consensus as reviewers met and went over their decisions. The MOOC review process is described in the following section.
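The article reports inter-rater reliability as a percentage but does not say how it was computed. The sketch below assumes simple pairwise percent agreement: for every pair of reviewers, it counts the dimensions on which both gave the same 1-5 rating. The function name `percent_agreement`, the reviewer names, and the ratings are invented for illustration and are not data from this study.

```python
from itertools import combinations


def percent_agreement(ratings: dict[str, list[int]]) -> float:
    """Mean pairwise exact-match agreement (0-100) across all reviewer pairs.

    Each value is one reviewer's ratings on the same ordered list of dimensions.
    """
    rating_lists = list(ratings.values())
    if len(rating_lists) < 2:
        raise ValueError("need at least two reviewers")
    n_dims = len(rating_lists[0])
    if any(len(r) != n_dims for r in rating_lists):
        raise ValueError("every reviewer must rate the same dimensions")

    matches = comparisons = 0
    for a, b in combinations(rating_lists, 2):
        matches += sum(x == y for x, y in zip(a, b))
        comparisons += n_dims
    return 100.0 * matches / comparisons


# Hypothetical ratings on the ten AMP dimensions for one MOOC.
example = {
    "reviewer_a": [1, 2, 1, 5, 4, 2, 3, 3, 2, 3],
    "reviewer_b": [1, 2, 1, 5, 4, 2, 3, 3, 2, 3],
    "reviewer_c": [1, 2, 1, 5, 3, 2, 3, 3, 2, 3],
    "reviewer_d": [1, 2, 1, 5, 4, 2, 3, 3, 2, 3],
}
print(percent_agreement(example))  # 95.0
```

Percent agreement does not correct for agreement expected by chance; statistics such as Cohen's kappa (two raters) or Fleiss' kappa (more than two) do, and would be a natural choice when testing whether other reviewers can use the tool consistently.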

MOOC Reviews

Thus far, researchers in the AMP group have reviewed nine Coursera, seven Udacity, and one EdX MOOC. They began with the thirteen MOOCs approved for credit by the American Council on Education. These courses included: College Algebra, BioElectricity, Genetics, Pre-Calculus, and Single Variable Calculus from Coursera; Introduction to Artificial Intelligence, Introduction to Computer Science, Introduction to Physics, Introduction to Statistics, Introduction to Parallel Programming, 3-D Modeling, and HTML 5 Game Development from Udacity; and Circuits and Electronics from EdX. It is important to note that all thirteen of these courses involve STEM (science, technology, engineering, mathematics) disciplines.

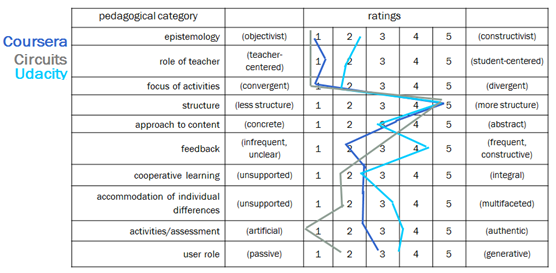

Within each platform, ratings for these first courses were quite similar. Table 1 gives the average ratings for these initially evaluated courses by platform; there were some slight but clear differences between platforms. Interestingly, while Coursera MOOCs followed a format resembling the traditional lecture/text-and-testing routine of university courses, spread over multiple weeks with hard deadlines, Udacity courses all followed a format that resembles nothing so much as the programmed learning approach developed by B.F. Skinner15. Udacity courses accordingly tended to fall slightly more toward the middle of the ratings than Coursera courses (see Figure 1). Only one course, Circuits, was available for review from EdX (Figure 1), so not much can be inferred about that platform, but Circuits was very much like the Coursera courses in both its obvious format and its pedagogical ratings.

Table 1. Average ratings of the initially evaluated (ACE-approved) MOOCs by platform

| Dimension | Coursera | Udacity | EdX |
|---|---|---|---|
| epistemology | 1.0 | 2.4 | 1.0 |
| role of teacher | 1.4 | 2.0 | 1.0 |
| focus of activities | 1.0 | 1.9 | 1.0 |
| structure | 5.0 | 4.9 | 5.0 |
| approach to content | 3.6 | 3.0 | 4.0 |
| feedback | 2.0 | 4.3 | 3.0 |
| cooperative learning | 2.8 | 2.1 | 2.0 |
| accommodation of individual differences | 2.6 | 3.0 | 2.0 |
| activities/assessment | 2.6 | 3.3 | 1.0 |
| user role | 3.0 | 3.1 | 2.0 |

Source: own study.

The Coursera courses approved for ACE credit, as well as EdX's Circuits, tended to be objectivist, teacher-centered, convergent, highly structured, more abstract than concrete, with minimal feedback. The courses tended to fall somewhere in the middle between supporting and not supporting cooperative learning, accommodating and not accommodating individual differences, artificial and authentic activities/assessment, and passive and active user roles.

Udacity courses tended to be neither objectivist nor constructivist; they were slightly less teacher-centered and convergent than Coursera courses, highly structured, halfway between abstract and concrete, and offered immediate, clear, and constructive feedback. They also tended not to support cooperative learning, but because of their self-directed approach they were quite accommodating of individual differences. Udacity also made an effort to develop authentic activities and, perhaps because of the large number of computer science courses, supported a more generative user role than the ACE-approved courses from Coursera and EdX.

Source: own study.

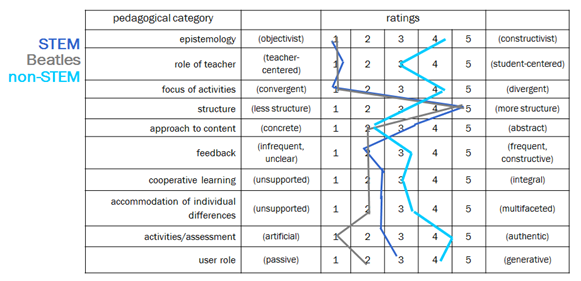

| Dimension | Coursera STEM | Coursera non-STEM | Coursera non-STEM w/o Beatles |
|---|---|---|---|
| epistemology | 1.0 | 3.8 | 4.7 |
| role of teacher | 1.4 | 2.5 | 3.0 |
| focus of activities | 1.0 | 3.5 | 4.3 |
| structure | 5.0 | 3.8 | 3.3 |
| approach to content | 3.6 | 2.5 | 2.7 |
| feedback | 2.0 | 3.0 | 3.3 |
| cooperative learning | 2.8 | 2.8 | 3.0 |
| accommodation of individual differences | 2.6 | 3.0 | 3.3 |
| activities/assessment | 2.6 | 3.8 | 4.7 |
| user role | 3.0 | 3.8 | 4.3 |

Source: own study.

Source: own study.

With the exception of The Music of the Beatles, the non-STEM Coursera courses tended to be constructivist, more student-centered and divergent, but less structured than their STEM counterparts. Although similar in their approach to content, the non-STEM courses offered more personal and more varied feedback, were more supportive of cooperative learning, were more accommodating of individual differences and assessment choices, had more authentic activities and assessments, and so supported a considerably more engaged user role.

Discussion

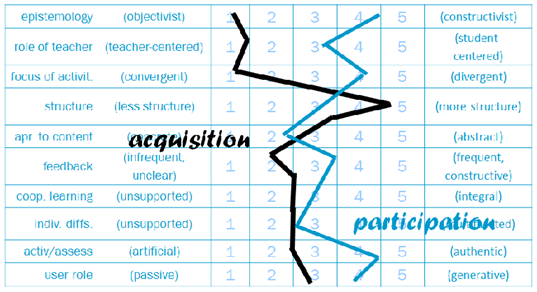

The comparison of STEM and non-STEM MOOCs, and the way the Beatles course seems to fit with the STEM rather than the non-STEM MOOCs, reveals two distinct patterns of pedagogical approaches among MOOCs. These seem to relate to what Anna Sfard16 identified as two metaphors for learning: the acquisition metaphor and the participation metaphor. In the acquisition metaphor, learning is seen as acquiring knowledge from outside the individual. In the participation metaphor, individuals collaboratively construct knowledge. Thus the two patterns identified in our preliminary research diverge most in terms of epistemology and of the dimensions that follow from it, such as focus of activities, activities and assessments, and the roles of the teacher and student.

Source: own study.

Our ongoing work with the AMP tool suggests that, in its present form, it can be used to distinguish among MOOC pedagogical approaches, and that it can do so with good consistency among raters. Indeed, inter-rater reliability has only improved over time even as we have sought out different sorts of MOOCs to review. Future work should test whether others can use it with similar consistency.

Although our preliminary MOOC reviews found some differences between the major platforms and between disciplinary areas, they also found that most of the courses we reviewed merely replicated traditional college teaching in a virtual format. We expect, however, that MOOCs will become more sophisticated as they evolve. Future work will explore such potential evolution, as well as more courses and different platforms.

The Udacity MOOCs we investigated did have an interesting technological interface, similar to B.F. Skinner's programmed instruction17. Udacity MOOCs required users to test their understanding before moving on and had no fixed due dates, which supported self-directed learning. However, the Udacity interface was not as sophisticated as most of the computer-assisted instructional programs developed in the 1980s and 1990s18, nor was it similarly grounded in the learning sciences. Udacity's founder, Sebastian Thrun, has recently announced that the company is rethinking its approach after a very public setback in a partnership with San Jose State University19. Udacity will be worth watching.

Indeed, MOOCs developed by other groups will also be worth watching, especially those designated cMOOCs. Future research will try to use the AMP tool to summarize the pedagogical approaches of such offerings. More importantly, future research will seek to characterize classes of MOOC pedagogies and to link these to retention and student learning, perhaps within categories of both subject areas and learners.

The rapid growth of MOOCs presents a pedagogical and design challenge that needs to be addressed as these courses continue to be developed at an expanding rate. It is important to identify course designs that address student needs and increase student retention without overwhelming instructors. Our research is a first step in this direction.

References

- M. Bousquet, Good MOOCs, bad MOOCs, „Chronicle of Higher Education”, 25.07.2012, http://chronicle.com/blogs/brainstorm/good-moocsbad-moocs/50361.

- Coursera hits 1 million students across 196 countries, http://blog.coursera.org/post/29062736760/coursera-hits-1-millionstudents-scross-196-countries.

- A. Darabi, M. Arrastia, D. Nelson, T. Cornille, X. Liang, Cognitive presence in asynchronous online learning: A comparison of four discussion strategies, „Journal of Computer Assisted Learning” 2011, Vol. 27, No. 3, pp. 216-227.

- T.L. Friedman, Revolution hits the universities, „The New York Times”, 26.01.2013, http://www.nytimes.com/2013/01/27/opinion/sunday/friedman-revolution-hits-the-universities.html?_r=0.

- D. Glance, M. Forsey, M. Riley, The pedagogical foundations of massive open online courses, „First Monday”, May 2013, http://firstmonday.org/ojs/index.php/fm/article/view/4350/3673.

- J.G. Holland, B.F. Skinner, The analysis of behavior: A program for self-instruction, McGraw-Hill, New York 1961.

- T. Levin, After setbacks, online courses are rethought, „The New York Times”, 10.12.2013, http://www.nytimes.com/2013/12/11/us/after-setbacks-online-courses-are-rethought.html.

- Q. Li, Knowledge building community: Keys for using online forums, „TechTrends” 2004, Vol. 48, No. 4, pp. 24-29.

- J. Lu, N. Law, Online peer assessment: Effects of cognitive and affective feedback, „Instructional Science” 2012, Vol. 40, No. 2, pp. 257-275.

- K. Masterson, Giving MOOCs some credit, 2013, http://www.acenet.edu/the-presidency/columns-and-features/Pages/Giving-MOOCs-Some-Credit.aspx.

- P. Norvig, The 100,000-student classroom, 2012, http://www.ted.com/talks/peter_norvig_the_100_000_student_classroom.html.

- S. Orn, Napster, Udacity, and the academy Clay Shirky, 2012, http://www.kennykellogg.com/2012/11/napster-udacity-and-academy-clayshirky.html.

- T. Reeves, Evaluating what really matters in computer-based education, 1996, http://www.eduworks.com/Documents/Workshops/EdMedia1998/docs/reeves.html#ref10.

- T.C. Reeves, J.G. Hedberg, MOOCs: Let's get REAL, „Educational Technology” 2014, Vol. 54, No. 1, pp. 3-8.

- A.J. Romiszowski, What's really new about MOOCs?, „Educational Technology” 2013, Vol. 53, No. 4, pp. 48-51.

- A. Sfard, On two metaphors for learning and the dangers of choosing just one, „Educational Researcher” 1998, Vol. 27, No. 4, pp. 4-13.

- R.J. Stiggins, Assessment crisis: The absence of assessment for learning, „Phi Delta Kappan” 2002, Vol. 83, No. 10, pp. 758-765.

- J.W. Strijbos, S. Narciss, K. Dünnebier, Peer feedback content and sender's competence level in academic writing revision tasks: Are they critical for feedback perceptions and efficiency?, „Learning and Instruction” 2010, Vol. 20, No. 4, pp. 291-303.

- K. Swan, M. Mitrani, The changing nature of teaching and learning in computer-based classrooms, „Journal of Research on Computing in Education” 1993, Vol. 26, No. 1, pp. 40-54.

- M.Y. Vardi, Will MOOCs destroy academia?, „Communications of the ACM” 2012, Vol. 55, No. 11, p. 5.

- B. Walker, Bridging the distance: How social interaction, presence, social presence, and sense of community influence student learning experiences in an online virtual environment, Ph.D. dissertation, University of North Carolina, 2007, http://libres.uncg.edu/ir/uncg/f/umi-uncg-1472.pdf.

- J.K. Waters, What do massive open online courses mean for higher ed?, „Campus Technology” 2013, Vol. 26, No. 12, http://camputechnology.com/Home.aspx.

- L. Yuan, S. Powell, MOOCs and Open Education: Implications for Higher Education, Centre for Educational Technology & Interoperability Standards, 2013, http://publications.cetis.ac.uk/2013/667.

Appendix A

INTRODUCTION TO ARTIFICIAL INTELLIGENCE

Sample Review

instructor(s): Sebastian Thrun & Peter Norvig

offered by: Udacity/Stanford University

subject area: Computer Science

level/pre-requisites: Listed as Intermediate: Some of the topics in Introduction to Artificial Intelligence will build on probability theory and linear algebra. You should have understanding of probability theory comparable to that at our ST101: Introduction to Statistics class.

dates/length: 10 Weeks but self-paced

time/week required: 10 Weeks but self-paced

COURSE DESCRIPTION

The objective of this class is to teach the student about modern AI. You will learn about the basic techniques and tricks of the trade. We also aspire to excite you about the field of AI. By the end you will understand the basics of Artificial Intelligence which includes machine learning, probabilistic reasoning, game theory, robotics, and natural language processing.

USE OF MEDIA

The media consist essentially of videos of paper-and-pencil work with a voice-over, but, like the other Udacity classes, the course has the embedded quiz feature. Media also include blogs, discussion boards, and responses to questions posted on the discussion board (DB).

ASSESSMENT

Small quizzes follow each presentation, and a large final quiz follows each section. Videos link to the relevant instruction when answers are incorrect or the student does not know or remember the answer. Assessment consists of embedded quizzes and problem sets for self-assessment and a single final exam for summative assessment.

PEDAGOGY

While pedagogy in this course falls mostly in the middle range on the dimensions reviewed, there are outliers for structure and feedback showing high structure and frequent and constructive feedback.

Epistemology (2). Mostly objectivist. Instruction broke complex topics down into simple parts, and each part was immediately followed by a video question whose answer was supplied right away. This keeps the focus on learning, but in the end the goals were the same for all.

Role of teacher (2). Some interaction and responses. Automated grading. One size fits all.

Focus of activities (1). There is only one right answer or set of answers for each problem and quiz. Instruction is very carefully sequenced, with embedded quizzes that do not let the user move forward until they get the correct answer. It is almost like programmed instruction, but with a somewhat lighter touch.

Structure (5). All criteria listed are present for this course.

Approach to content (3). Numerous real-world examples are provided to show the relationship to and value of AI (a robotic car, translation, the game of checkers), but answers were based on the presentations and in the end there was only one right answer.

Feedback (5). All identified areas are met. It is debatable whether the responses are personal or impersonal, but they seemed, at some level, to address each person's needs.

Cooperative learning (2). Meetups are encouraged, which places this at level two. No collaborative or group work is encouraged or assessed other than meetups.

Accommodation of individual differences (3). Closed captioning, verbal and written presentations. Self-directed instruction.

Activities/assessment (3). Numerous real-world examples. Students are still required to give the one (or multiple) correct answers based on the instruction.

User role (3). Meetups and study groups encouraged.

Appendix B

INTRODUCTION TO GENETICS AND EVOLUTION

Sample Review

instructor(s): M. Noor

offered by: Coursera

subject area: Genetics and Evolutionary Biology

level/pre-requisites:

dates/length: 10 weeks

time/week required: 8-10 hours per week

COURSE DESCRIPTION

This course is a general introduction to genetics and evolution. It is „intended to be a broadly accessible, factual, science-based course about the modern practices of genetics and evolutionary biology”. The material is presented through short (10-20 minute) video segments that include major concepts, historical information, real-world examples, basic techniques, and a few worked problems. Assessment is through multiple choice problem sets and exams. Interestingly, „this online course does not include all the material covered in the analogous Duke University course, nor does it offer Duke University course credit upon completion”.

USE OF MEDIA

The major delivery medium for this course is taped lectures: voice-over PowerPoint presentations with embedded video. A window shows the instructor, who has an engaging style. The presentations are short; most are less than 20 minutes and many are less than 10 minutes. They use narrative examples and graphics to illustrate ideas, and focus on specific concepts. Each of the 10 course modules has 5 to 7 of these, which are marked G if they are of general interest (for people who just want to learn something about evolution and genetics) or S if they are more specific (these cover topics needed for course credit). The lecture slides are available for download, as is a transcript of the audio. Each module also has links to general resources that extend the topic for those interested. Recommended (but not required) textbooks are organized by chapters linked to topics. There is also a course wiki with links to extensive resources in a variety of media (books, blogs, web pages, videos), both in general and by weekly topic, as well as a glossary. There are also links to Wikipedia articles on important genetic scientists and tools that seem to have been added by students.

ASSESSMENT

This course has a midterm and a final exam. Each is multiple choice, counts for 40% of the total grade, can only be taken once, and is broken into two timed 10-question sections (90 minutes for each section). There are also 8 problem sets worth 2.5% of the total grade each, which can be repeated any number of times before specific due dates, with the grade on the last submission being the one that counts. The problem sets are also multiple choice. Students cannot see whether their answers on the problem sets are correct until the deadline for the problem sets has passed, and correct answers are never provided (although after the deadlines students can try all the options to find the correct answers). Everything is machine scored.

The final grade (40% for the midterm, 40% for the final, and 20% for the 8 problem sets) must be 80% or more for Coursera to award a Verified Certificate or Statement of Accomplishment. It appears that Duke University will not accept such credit. To be recommended for ACE credit (recommended for 2.0 semester hours of Introduction to Biology or General Science college credit), students must take a separate, proctored, ACE-approved Credit Exam, which counts for 80% of the total grade (the same 8 problem sets, worth 2.5% each, count for the other 20%). However, ACE credit requires a final grade of only 60%.
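As an illustration only, the two grading schemes can be written as weighted sums. The symbols are ours, not the course's: M, F and E stand for the midterm, final and ACE Credit Exam scores, and P_1 to P_8 for the eight problem-set scores, each assumed to be on a 0 to 100 scale.

$$G_{\text{certificate}} = 0.40\,M + 0.40\,F + 0.025\sum_{i=1}^{8} P_i \;\ge\; 80$$

$$G_{\text{ACE}} = 0.80\,E + 0.025\sum_{i=1}^{8} P_i \;\ge\; 60$$

Since 8 × 2.5% = 20%, the problem sets carry the same total weight in both schemes; the schemes differ only in whether the remaining 80% comes from the midterm and final or from the single proctored exam, and in the passing threshold.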

PEDAGOGY

The ratings on pedagogy indicated a structured, objectivist, teacher-centered approach. There was some encouragement or support for cooperative learning and some use of authentic assessments and a generative user role.

Epistemology (1). This course is heavily instructionist. The materials are carefully designed. There is not an emphasis on integration of learners' experiences or backgrounds. The course consists of 10 modules with multiple video lectures, recommended texts and other materials. In fact, students are asked not to contact the instructor or TAs although TAs do monitor the discussions and respond to student questions. However, the discussions weren't used much by students.

Role of teacher (1). This course is totally teacher and materials centered. The instructor makes an effort to make a number of the concepts accessible to students. All communication between the teacher and TAs and students is one way. The course is very organized and focused on the professor as the expert.

Focus of activities (1). The only activities in this course are problem sets and exams and they are all multiple choice. The assessments and activities are focused on arriving at one correct answer.

Structure (5). The course is very structured, in a good way. All modules are pretty much the same so students know what to expect. The videos are bite-sized and they all start with a nice overview of the content. Problem sets are based on main ideas. The rating for structure is a „5” per the AMP scoring guide because it has all 4 of the criteria required for that rating.

Approach to content (3). The course takes both abstract and concrete approaches to the content. The instructor strives to make the material concrete, but because he often uses letters to stand for things, it ends up being fairly abstract.

Feedback (1). The only feedback given in this course is corrections for mistakes in the presentations and this is one way. In the sections reviewed, there are no questions answered by either the instructor or the TAs.

Cooperative learning (3). There was support for meet-ups & discussions.

Accommodation of individual differences (2). Closed captioning, but little other accommodation in the main part of the course. On the other hand, there is a plethora of resources besides the lectures associated with each unit, including text readings (in either a book you can buy or older versions of the text available online for free), the slides and a transcript for each lecture, and a variety of interesting additional material (enrichment) in a variety of media formats.

Activities/assessment (4). Although they are all multiple choice, the assessments in this course ask for the application of course content to real-world problems. The instructor also uses real-world examples. That said, learning tended toward a passive mode: there are no interactive activities that allow students to explore concepts (although genetics is a great topic for simulations), and questions are handled among students.

User role (3). The role of the learner is mainly to access the material and complete assignments. The user role in this course would be rated as more passive (even the multiple choice problem sets have no feedback) were it not for the possibility of meet-ups.

Appendix C

COMIC BOOKS & GRAPHIC NOVELS

Sample Review

instructor(s): William Kuskin

offered by: Coursera

subject area: literature

level/pre-requisites: none

dates/length: seven weeks

time/week required: 4-7 hours

COURSE DESCRIPTION

„Comic Books and Graphic Novels has four goals. First, it presents a survey of the history of American comics and a review of major graphic novels circulating in the U.S. today. Second, it reasons that as comics develop in concert with and participate in humanist culture, they should be considered a serious art form that pulls together a number of fields - literature and history, art and design, film and radio, and social and cultural studies. Third, it argues that these fields come together materially in the concept of the book and intellectually in the importance of humanism, both of which allow the human mind to transcend the limitations of time. Finally, it concludes that because they remain on a transformative boundary line, comics should remind us that art is generative and that there is always hope”.

This course does what it promises through a series of lectures that include guest lectures from comic collectors, comic book store owners, teachers, and comic artists. The lectures are engaging. The instructor does a good job of introducing students to both comics and literary criticism. The assessments involve peer-reviewed essays, two tests, a trip to a comic book store, and the creation of a comic. Discussion of assignments is allowed and it is lively, as is collaboration on the comic assignment.

USE OF MEDIA

This course is centered on very well produced lectures. The main lectures given by the instructor have high production values, with most consisting of a voice-over on a black screen split between comic examples and an outline of the major points of the lecture relevant to the adjacent illustration. There are also interesting interviews with a variety of people involved with comics. Units also include a variety of print materials and references (texts). The units are time-released. The course also includes automated tests, open discussion boards and mechanisms for peer review.

ASSESSMENT

Assessments have an interesting variety. They include two 750-1000 word essays (20% each); a comic creation project (30%); two tests (15% each); comic shop collaborative (ungraded) and optional discussions (ungraded). What is interesting about this course is that it encourages collaboration in the discussions and allows it in the comic creation.
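For illustration, the graded components combine as below. The symbols are ours, not the course's: E_1 and E_2 are the two essay scores, C the comic creation project score, and T_1 and T_2 the two test scores, each assumed to be on a 0 to 100 scale. The ungraded comic shop collaborative and discussions do not enter the sum.

$$G = 0.20\,(E_1 + E_2) + 0.30\,C + 0.15\,(T_1 + T_2), \qquad 0.40 + 0.30 + 0.30 = 1.00$$

Written this way, essays and tests each carry 40% and 30% of the grade respectively, so the open-ended, peer-reviewed work (essays plus comic, 70%) outweighs the tests with single correct answers (30%).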

PEDAGOGY

The pedagogy in this course falls mostly in the middle range on the dimensions reviewed, indicating that it sits between constructivist and instructivist approaches, although it veers slightly toward the constructivist.

Epistemology (5). This course is clearly constructivist, and the instructor similarly seems to think knowledge is created in learners' minds (but perhaps according to particular conventions). There are many ways to do assignments, and the integration of learners' experiences is encouraged.

Role of teacher (3). This course meets two of the four teacher-centered criteria: firm deadlines and one-way communication from the instructor. A third criterion, one size fits all, is true of the lectures, but the assignments give lots of leeway.

Focus of activities (4). This course was more divergent than convergent. There were many possible right answers on the essays and the design of the comic. Tests, however, did have single correct answers.

Structure (3). This course was in the middle on structure. It had clear directions on what to do and transparent navigation in the web pages, but the organization of units, presentations, and assignments varied. Rubrics for peer review were provided, including examples of essays.

Approach to content (2). The approach to this course was more concrete than abstract. Concrete analogies, indeed the analysis of comics itself, were used to make abstract ideas like trope, metonymy, genre, etc. more understandable. There was also a variety of assignments offering different ways of demonstrating understanding.

Feedback (3). Feedback was not immediate, and the peer feedback was not as clear as it might be because the students didn't understand the rubric. It was however constructive and personal. Feedback on the tests isn't immediate either, but it is clear and constructive.

Cooperative learning (3). Meetups and use of the discussion boards were encouraged. Discussions around assignments are supported, and group activities are part of the main course in that learners can do collaborative comics. In addition, the major grading in the course involves peer review.

Accommodation of individual differences (3). The course is somewhere between unsupported and multi-faceted in that it meets two of the four criteria: closed captioning, and the provision of a diversity of activities and assessments (essays, tests, and comic design).

Activities/assessment (4). This course is more authentic than artificial. It is not clear how "authentic" literary criticism applied to comics is, but the instructor does work through examples and gives students examples to do the same with actual pages from comics. Essays are peer-reviewed and can be discussed in the forums. The design of a comic was very authentic in that it required the use of comic conventions.

User role (4). The user role in this course is more generative than passive in that users are asked to critique comics and create their own and discussions are very open with topics generated by the learners.